Abstract

We present Conformal Intent Classification and Clarification (CICC), a framework for fast and accurate intent classification for task-oriented dialogue systems. The framework turns heuristic uncertainty scores of any intent classifier into a clarification question that is guaranteed to contain the true intent at a pre-defined confidence level. By disambiguating between a small number of likely intents, the user query can be resolved quickly and accurately. Additionally, we propose an extension of the framework for out-of-scope detection. In a comparative evaluation on seven intent recognition datasets, we find that CICC generates small clarification questions and is capable of out-of-scope detection. CICC can substantially help practitioners and researchers improve the user experience of dialogue agents with specific clarification questions.

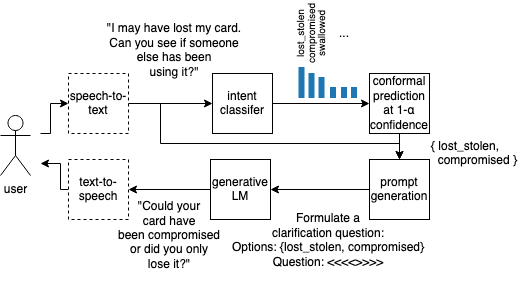

We tackle the problem of interactively classifying user intents in task-oriented dialogue.

Background

Intent classification (IC) is a crucial step in the selection of actions and responses in task-oriented dialogue systems. To offer the best possible experience with such systems, IC should accurately map user inputs to a predefined set of intents. A widely known challenge of language in general, and IC specifically, is that user utterances may be incomplete, erroneous, and contain linguistic ambiguities.

Intent classification is typically tackled using (pre-trained) language models that are fine-tuned for classification on a data set collected in the wild. These classifiers output a score for each known intent, and a softmax layer turns these scores into classification probabilities.
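Here is a minimal illustration of that last step: a numerically stable softmax written with NumPy. The logits are made-up scores for a hypothetical agent with three intents. They are not taken from any real classifier.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Turn raw intent scores of shape (n_inputs, K) into probabilities per row."""
    # Subtracting the row-wise maximum avoids overflow and leaves the result unchanged.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


# Hypothetical raw scores for one utterance and K = 3 intents.
logits = np.array([[2.0, 1.0, -1.0]])
probs = softmax(logits)
print(probs.round(3))  # -> [[0.705 0.259 0.035]]
```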

Problem

In task-oriented dialogue systems, we need to handle the probabilistic output of an intent classifier. Continuing the conversation under the assumption that the class with the highest predicted probability is the true user intent is overly simplistic and will result in a poor user experience.

For example, suppose the two most probable intents have predicted probabilities of 0.49 and 0.48. Then it is more reasonable to ask the user which of the two is the true intent than to blindly assume that the most probable intent is correct and continue the conversation. Conversely, suppose the classifier predicts almost equal probabilities for, e.g., 15 intents. Then it is unreasonable to assume that the most probable intent is the true user intent, while a clarification question that disambiguates between all fifteen intents would be tedious and burdensome for the user.

CICC formalizes when to ask a clarification question and how to ask it, ensuring that:

- the true user intent is detected at a pre-specified confidence level

- no clarification question is asked if the model is certain

- any probabilistic intent classifier can be used without re-training

CICC: Conformal Intent Classification and Clarification

CICC solves the problem of interactively classifying user intents in three simple steps:

- obtain classification scores using an intent classifier

- use conformal prediction to turn the classification scores into a set of intents that contains the true user intent at a predefined confidence level of \(1-\alpha\), say 95%

- use the intent set to choose among

  - continuing the conversation if the set contains only one intent

  - asking the user to disambiguate between the intents in the set if it contains at most a predefined threshold of \(th\) intents, e.g. 7

  - asking the user to reformulate the query if the set contains more than \(th\) intents
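The three steps can be sketched in Python as follows. This is a minimal sketch, not the reference implementation. It assumes split-conformal prediction with the nonconformity score \(1-\hat{p}_y\), i.e., one minus the predicted probability of the true intent. That is a common choice described by Angelopoulos and Bates; CICC may use a different score. The names calibrate, prediction_set and cicc_action are hypothetical. Treating an empty prediction set like an oversized one is also our assumption, because the steps above do not cover that case.

```python
import math

import numpy as np


def calibrate(cal_probs: np.ndarray, cal_labels: np.ndarray, alpha: float) -> float:
    """Split-conformal calibration: return the score threshold q_hat.

    Assumes the nonconformity score s = 1 - p(true intent).
    """
    n = len(cal_labels)
    scores = 1.0 - cal_probs[np.arange(n), cal_labels]
    # Finite-sample correction: take the ceil((n + 1)(1 - alpha))-th smallest score.
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        # Too few calibration examples for this alpha: q_hat = 1 keeps every intent.
        return 1.0
    return float(np.sort(scores)[k - 1])


def prediction_set(probs: np.ndarray, q_hat: float) -> np.ndarray:
    """Step 2: indices of all intents whose score 1 - p is at most q_hat."""
    return np.flatnonzero(probs >= 1.0 - q_hat)


def cicc_action(pred_set: np.ndarray, th: int = 7) -> str:
    """Step 3: decide how to continue the dialogue given the prediction set."""
    if len(pred_set) == 1:
        return "continue"      # a single intent: act on it
    if 1 < len(pred_set) <= th:
        return "clarify"       # ask the user to pick one of these intents
    return "reformulate"       # too many intents (or, by assumption, none at all)


# Step 1 (hypothetical): classifier probabilities and true labels for 5 calibration inputs.
cal_probs = np.array([
    [0.90, 0.05, 0.05],
    [0.10, 0.80, 0.10],
    [0.20, 0.20, 0.60],
    [0.50, 0.40, 0.10],
    [0.30, 0.60, 0.10],
])
cal_labels = np.array([0, 1, 2, 1, 0])

q_hat = calibrate(cal_probs, cal_labels, alpha=0.2)
for probs in (np.array([0.49, 0.48, 0.03]), np.array([0.95, 0.03, 0.02])):
    pred = prediction_set(probs, q_hat)
    print(pred.tolist(), cicc_action(pred, th=7))
# -> [0, 1] clarify
# -> [0] continue
```

The calibration set has only five inputs so that the example stays readable. It would not even suffice for \(\alpha=0.05\): in that case calibrate returns a threshold that keeps every intent.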

Moreover, the clarification questions are typically small, i.e., they contain far fewer intents than the predefined threshold.

Parameters

CICC comes with two hyperparameters. These have intuitive interpretations and can easily be set on the calibration set.

- Significance level \(\alpha\) (confidence level \(1-\alpha\))

  - This parameter controls how certain we want to be that the true user intent is in the predicted set of intents in the second step of the algorithm described above. For example, for \(\alpha=0.05\), the predicted intent set is guaranteed to contain the true user intent with probability at least \(1-\alpha=95\%\), i.e., for at least 95% of test inputs on average. Implicitly, this parameter also controls the size of the prediction set: the lower \(\alpha\), the larger we generally expect the prediction sets to be. The parameter can be set depending on the performance of the intent classifier and the requirements of the use case. For example, if the intent classifier obtains an accuracy of 90% on the calibration set, \(\alpha\) can easily be set to \(1-0.99=0.01\).

- Maximum prediction set size \(th\)

  - This parameter controls when we consider a prediction set too large for a clarification question. For example, suppose a user input yields a prediction set with twenty possible intents and \(th=7\). In that case we reject the input as too ambiguous to resolve with a clarification question, and we ask the user to reformulate the query entirely instead. This parameter can be set based on interaction design knowledge and knowledge of the user base. We generally advise setting it no higher than 7 to avoid overly long clarification questions.
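To see how \(\alpha\) and \(th\) interact, one can sweep \(\alpha\) on held-out data and inspect three quantities: the empirical coverage, the average set size, and the share of inputs whose set exceeds \(th\). The sketch below does this on purely synthetic data from a simulated classifier, with Dirichlet-distributed probabilities and labels drawn from those probabilities. The simulation, the sizes, and the score \(1-\hat{p}_y\) are assumptions for illustration only. The resulting numbers say nothing about how CICC actually performs.

```python
import math

import numpy as np

rng = np.random.default_rng(0)
K, n_cal, n_test, th = 20, 1000, 2000, 7  # hypothetical sizes


def simulate(n: int) -> tuple[np.ndarray, np.ndarray]:
    """Synthetic, perfectly calibrated classifier: labels are drawn from its own probabilities."""
    probs = rng.dirichlet(np.full(K, 0.2), size=n)
    u = rng.random(n)
    # Inverse-CDF sampling of one label per row (clipped to guard against rounding).
    labels = np.minimum((probs.cumsum(axis=1) < u[:, None]).sum(axis=1), K - 1)
    return probs, labels


def conformal_threshold(cal_probs: np.ndarray, cal_labels: np.ndarray, alpha: float) -> float:
    """Split-conformal threshold for the (assumed) score 1 - p(true intent)."""
    n = len(cal_labels)
    scores = 1.0 - cal_probs[np.arange(n), cal_labels]
    k = math.ceil((n + 1) * (1 - alpha))
    return 1.0 if k > n else float(np.sort(scores)[k - 1])


cal_probs, cal_labels = simulate(n_cal)
test_probs, test_labels = simulate(n_test)

results = []
for alpha in (0.2, 0.1, 0.05, 0.01):
    q_hat = conformal_threshold(cal_probs, cal_labels, alpha)
    in_set = test_probs >= 1.0 - q_hat               # (n_test, K) membership mask
    sizes = in_set.sum(axis=1)
    coverage = in_set[np.arange(n_test), test_labels].mean()
    too_large = np.mean(sizes > th)                  # share that would get "please reformulate"
    results.append((alpha, coverage, sizes.mean(), too_large))
    print(f"alpha={alpha:<4} coverage={coverage:.3f} "
          f"mean set size={sizes.mean():.2f} share > th={too_large:.3f}")
```

A lower \(\alpha\) can only raise the calibrated threshold, so the sets grow monotonically as \(\alpha\) decreases. How fast they grow, and therefore how often \(th\) is exceeded, depends on the classifier.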

Model, Data and Computational Requirements

The requirements of CICC are easily fulfilled in a dialogue agent setting.

CICC relies on an intent detection model, or classifier, that maps a user input to a vector of scores in \(\mathbb{R}^K\), one per intent, for a dialogue agent that supports \(K\) intents.

Any model that implements, e.g., scikit-learn's predict_proba() would therefore work, as would the (softmax) output of a neural network classifier.
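Below is a minimal Python sketch of this interface, using NumPy. The names ProbabilisticClassifier, SoftmaxWrapper, intent_probabilities and fake_net are hypothetical. The Protocol only spells out the duck-typed predict_proba() contract. The wrapper shows one way a neural network's logits could be exposed through that contract.

```python
from typing import Callable, Protocol, Sequence

import numpy as np


class ProbabilisticClassifier(Protocol):
    """Structural type: anything with predict_proba() returning an (n_inputs, K) array."""

    def predict_proba(self, X: Sequence[str]) -> np.ndarray: ...


class SoftmaxWrapper:
    """Hypothetical adapter exposing a neural network's logits through predict_proba()."""

    def __init__(self, logits_fn: Callable[[Sequence[str]], np.ndarray]) -> None:
        self.logits_fn = logits_fn

    def predict_proba(self, X: Sequence[str]) -> np.ndarray:
        z = np.asarray(self.logits_fn(X), dtype=float)
        z = z - z.max(axis=1, keepdims=True)  # numerical stability
        e = np.exp(z)
        return e / e.sum(axis=1, keepdims=True)


def intent_probabilities(model: ProbabilisticClassifier, X: Sequence[str], K: int) -> np.ndarray:
    """Query the model and check that it yields one probability per supported intent."""
    probs = np.asarray(model.predict_proba(X))
    if probs.shape != (len(X), K):
        raise ValueError(f"expected shape {(len(X), K)}, got {probs.shape}")
    if not np.allclose(probs.sum(axis=1), 1.0):
        raise ValueError("rows must sum to 1 (apply a softmax first)")
    return probs


def fake_net(X: Sequence[str]) -> list[list[float]]:
    """Stand-in 'network': made-up logits for K = 3 intents."""
    return [[len(x) / 5, 0.0, 1.0] for x in X]


probs = intent_probabilities(SoftmaxWrapper(fake_net), ["hi", "book a flight"], K=3)
```

A fitted scikit-learn classifier satisfies the same structural type. The columns of its predict_proba() output follow the order of its classes_ attribute, so calibration labels must be mapped to column indices in that order.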

Additionally, CICC requires a relatively small calibration data set. Formally, this calibration set should be exchangeable with the test data, i.e., calibration and test examples should be identically, but not necessarily independently, distributed. In practice, such a data set can simply be obtained by collecting and labelling actual user inputs. It need not be large, but it should ideally include some examples from each of the \(K\) intents.
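Before calibrating, it may be worth checking which intents the calibration set does not cover at all. The following minimal sketch assumes that labels are encoded as integers \(0,\dots,K-1\). The function name and the example labels are hypothetical.

```python
import numpy as np


def intents_missing_from_calibration(cal_labels: np.ndarray, K: int) -> np.ndarray:
    """Return the intent indices (0..K-1) without any labelled calibration example."""
    counts = np.bincount(cal_labels, minlength=K)
    return np.flatnonzero(counts == 0)


cal_labels = np.array([0, 2, 2, 3, 0, 5])  # hypothetical labelled user inputs
missing = intents_missing_from_calibration(cal_labels, K=6)
print(missing)  # -> [1 4]
```

With a single threshold, split-conformal prediction gives a marginal guarantee over all inputs, not a per-intent one. Intents that are rare or absent in the calibration set may therefore be covered less often than \(1-\alpha\).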

The computational requirements of CICC are very limited. Calibration can generally be done in seconds. Converting classification scores to prediction sets adds very little overhead, because it only requires including the intents whose scores pass a threshold fixed during calibration. These operations are easily vectorized, so dialogue agents with up to thousands of intents are supported.
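Here is a sketch of the vectorized conversion with NumPy. It assumes the split-conformal rule in which an intent enters the prediction set when its probability is at least \(1-\hat{q}\), for a calibrated threshold \(\hat{q}\). The batch size, the number of intents, the random stand-in probabilities and the value of \(\hat{q}\) are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_inputs, K = 256, 5000          # hypothetical batch size and number of intents
q_hat = 0.9                      # hypothetical threshold from an earlier calibration run

# Stand-in classifier output: softmax over random logits.
logits = 4.0 * rng.normal(size=(n_inputs, K))
probs = np.exp(logits - logits.max(axis=1, keepdims=True))
probs /= probs.sum(axis=1, keepdims=True)

# One vectorized comparison yields the prediction sets of the whole batch.
in_set = probs >= 1.0 - q_hat    # boolean mask, shape (n_inputs, K)
sizes = in_set.sum(axis=1)       # set size per input

# Optional: explicit intent indices per input (np.nonzero returns them in row-major order).
rows, cols = np.nonzero(in_set)
pred_sets = np.split(cols, np.cumsum(sizes)[:-1])
```

Computing the mask alone is a single pass over an \(n \times K\) array. Converting it to explicit index lists is only needed when the intents have to be verbalized in a clarification question.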

Further Reading

A key technology driving CICC is conformal prediction. Although CICC supports other variants of conformal prediction, we particularly recommend split-conformal prediction. Some references on (split) conformal prediction:

- Vovk, Vladimir, Alexander Gammerman, and Glenn Shafer. Algorithmic learning in a random world. Vol. 29. New York: Springer, 2005.

- Angelopoulos, Anastasios N., and Stephen Bates. Conformal prediction: A gentle introduction. Foundations and Trends® in Machine Learning 16.4 (2023): 494-591.